AI code generation transformed how quickly my teams could ship features. We went from week-long implementations to same-day deployments. The velocity was real, and initially it felt like a competitive advantage.

Then we started scaling.

What worked perfectly for solo projects or small features became problematic under real production pressure: multiple developers working on interconnected systems, tight deployment windows, security audits, and clients who now expected “we can have this live tomorrow since we’re using AI.”

The code was being generated faster than we could maintain it. Technical debt was accumulating at a pace I hadn’t seen before.

That’s when I started experimenting with spec kits. Not as formal process documentation, but as a lightweight framework to keep AI code generation productive without letting our codebase fragment. Here’s what I learned.

Why AI Code Generation Needs Structure

In my recent projects, I kept running into the same patterns once AI was involved at scale.

Common Pain Points with AI-Generated Code

- Inconsistent patterns across the codebase. You get 20 files touched, two or three competing patterns, and duplicated logic. The feature technically works, but it feels fragile and painful to maintain.

- Big refactors that shouldn’t have been necessary. Without clear requirements, AI fills gaps with assumptions. I’ve seen features get built to 80% completion, then require significant rework when edge cases, security requirements, or performance constraints become apparent.

- Security vulnerabilities introduced silently. AI code generation tools are trained on public code, which means they can reproduce common security anti-patterns: hardcoded credentials, SQL injection vulnerabilities, missing input validation, and insecure authentication flows.

- Lack of best practices enforcement. Error handling is inconsistent. Logging and observability get skipped. Tests are superficial or missing entirely. The code runs, but it’s not production-ready.
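To make the security point concrete, here is a minimal Python sketch of one anti-pattern named above, SQL built by string interpolation, next to the parameterized form a reviewer would expect. The `users` table and the function names are hypothetical and exist only for illustration; the example uses the standard-library `sqlite3` module with an in-memory database.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, email: str) -> list:
    # Anti-pattern: user input is interpolated straight into the SQL string.
    query = f"SELECT id, email FROM users WHERE email = '{email}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, email: str) -> list:
    # Parameterized query: the value is passed separately from the SQL text.
    return conn.execute(
        "SELECT id, email FROM users WHERE email = ?", (email,)
    ).fetchall()


def demo_connection() -> sqlite3.Connection:
    # In-memory database with a hypothetical users table, for illustration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany(
        "INSERT INTO users (email) VALUES (?)",
        [("a@example.com",), ("b@example.com",)],
    )
    return conn
```

Passing an input such as `x' OR '1'='1` to the unsafe version returns every row in the table, while the safe version treats the same string as a literal email and returns nothing. This is the kind of difference that is easy to miss when reviewing generated code quickly.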

While AI excels at code generation, it struggles with long-term consistency. It often loses track of context, confidently inserts details nobody approved, and defaults to demo-quality output when the job calls for a production-ready solution for real users. Once it starts drifting, you end up with subtle security gaps, random architecture decisions, and a codebase that feels stitched together.

Spec kits have been working as a stabilizer for me. They help keep decisions consistent and make reviews easier, turning AI into a reliable execution assistant rather than a chaotic code generator. They are not a cure-all for coding with AI, but they are a good aid.

What Is a Spec Kit?

A spec kit is a lightweight set of documentation that answers three questions before code gets written:

- What are we building?

- How are we building it?

- How do we know when it’s done?

If you prompt AI with something vague like “build an app with auth, a dashboard, and analytics,” it will generate a nice-looking demo with numerous assumptions. That’s the trap—it looks productive, but it’s not predictable.

Spec kits force the important decisions to exist upfront: product intent, user flows, acceptance criteria, technical decisions, task breakdown, and the edge cases nobody wants to think about until they’re in production at 2am.

The funny part is that this doesn’t slow me down. It speeds things up, because I stop rebuilding the same feature three times in three different ways.

How Spec Kits Fit Into the AI-Augmented SDLC

The teams I work with who are getting the most value from AI code generation aren’t using it as a replacement for engineering judgment. They’re using it as an accelerator within a structured SDLC. Spec kits support this by providing clarity at each critical phase.

- Requirements and Planning: AI can help draft user stories and surface potential technical challenges early. But product decisions, prioritization, and defining “done” still require human judgment.

- Architecture and Design: AI can propose implementation approaches, but architectural decisions—data ownership, service boundaries, security models—need to be locked in by experienced engineers before code generation begins.

- Implementation: This is where AI code generation shines. Once requirements and architecture are clear, AI can rapidly generate boilerplate, implement defined patterns, and accelerate routine coding tasks.

- Code Review: AI-generated code still needs human review, but the focus shifts. Instead of checking syntax, reviewers focus on architectural consistency, security implications, and whether the implementation actually solves the stated problem.

- Testing and QA: AI can write unit tests and integration tests quickly, but test strategy—what to test, how much coverage is enough, what edge cases matter—requires engineering experience.

The pattern I keep seeing: AI accelerates execution, but strategy, standards, and judgment still come from your team.

A Practical Spec Kit Workflow

Here’s the workflow I’m using right now with my teams:

I start with outcomes, not features. Before building anything, I force myself to answer: what’s the outcome? Who is this for? What problem are we solving? What does success look like?

Not in a “vision board” way. In a concrete way.

For example: a user uploads 4 images and gets a result in under 90 seconds. An admin can review and export results. Actions are tracked. Errors are visible. The system can be debugged.

If I don’t lock this in early, AI will gladly fill the gaps with whatever sounds reasonable, and then I spend hours undoing it later.

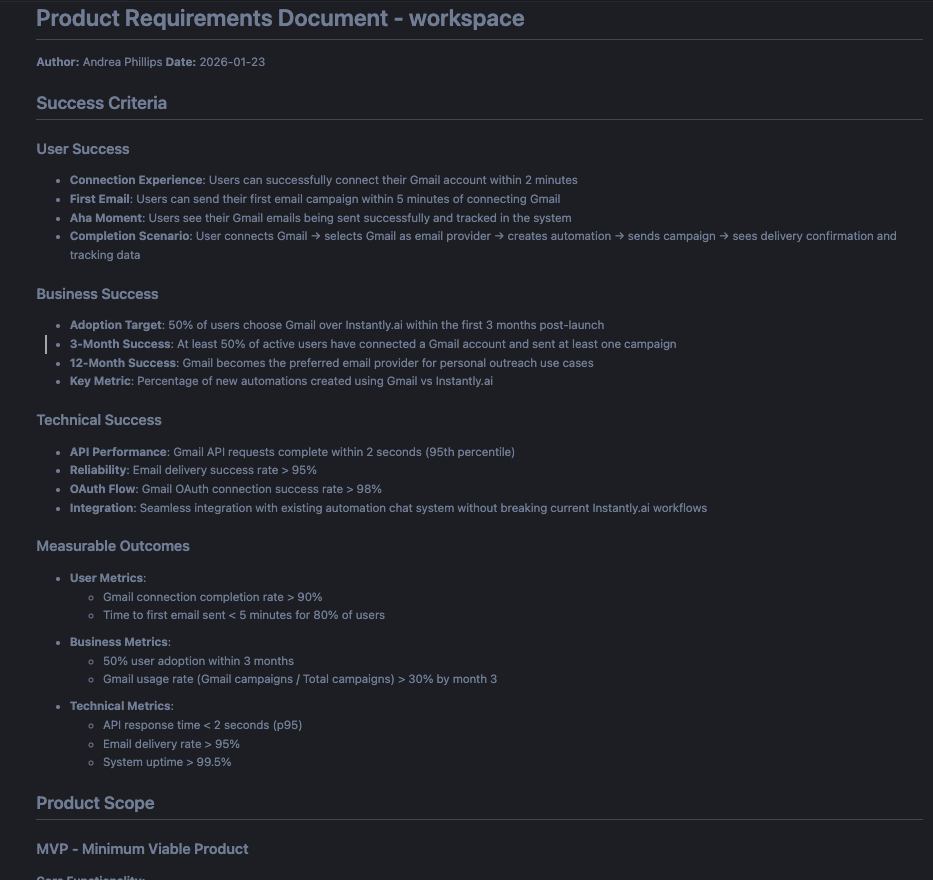

Measurable outcomes – the PRD section that prevents scope creep

Then I define the surface area to prevent scope creep. This is where I list what’s actually in the product:

- Screens/pages

- Primary actions

- Roles/permissions

- System objects (data model)

- Integrations (Supabase, Stripe, OpenAI, internal APIs, etc.)

This step saves me from accidental architecture. It also stops AI from inventing extra systems nobody asked for.

I write a lightweight PRD (just enough to stop guessing). I’m not trying to write a novel. I just want the PRD to remove ambiguity.

The structure I keep coming back to is:

- Overview

- Goals/non-goals

- User stories

- Requirements

- Acceptance criteria

- Out of scope

- Risks/open questions

Acceptance criteria are the part I care about most. If “done” isn’t explicit, the model will decide for you, and we already know how that goes.
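One way to keep “done” explicit is to write acceptance criteria as checks that can be run, not just read. The Python sketch below is an illustration under assumptions: `RunResult`, `Criterion`, and `unmet` are hypothetical names, and the criteria simply restate the example outcomes from earlier in this article (4 images, a result in under 90 seconds, tracked actions).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RunResult:
    # Hypothetical record of one end-to-end run of the upload flow.
    images_uploaded: int
    seconds_to_result: float
    actions_logged: bool


@dataclass(frozen=True)
class Criterion:
    name: str
    check: Callable[[RunResult], bool]


# Criteria mirror the example outcomes described in this article.
CRITERIA = [
    Criterion("accepts 4 images", lambda r: r.images_uploaded == 4),
    Criterion("result in under 90 seconds", lambda r: r.seconds_to_result < 90),
    Criterion("actions are tracked", lambda r: r.actions_logged),
]


def unmet(result: RunResult) -> list[str]:
    """Return the names of criteria the run did not satisfy."""
    return [c.name for c in CRITERIA if not c.check(result)]
```

A run that takes 120 seconds without logging would come back with two unmet criteria by name, which gives both the reviewer and the model a concrete target instead of a vague sense of “mostly working.”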

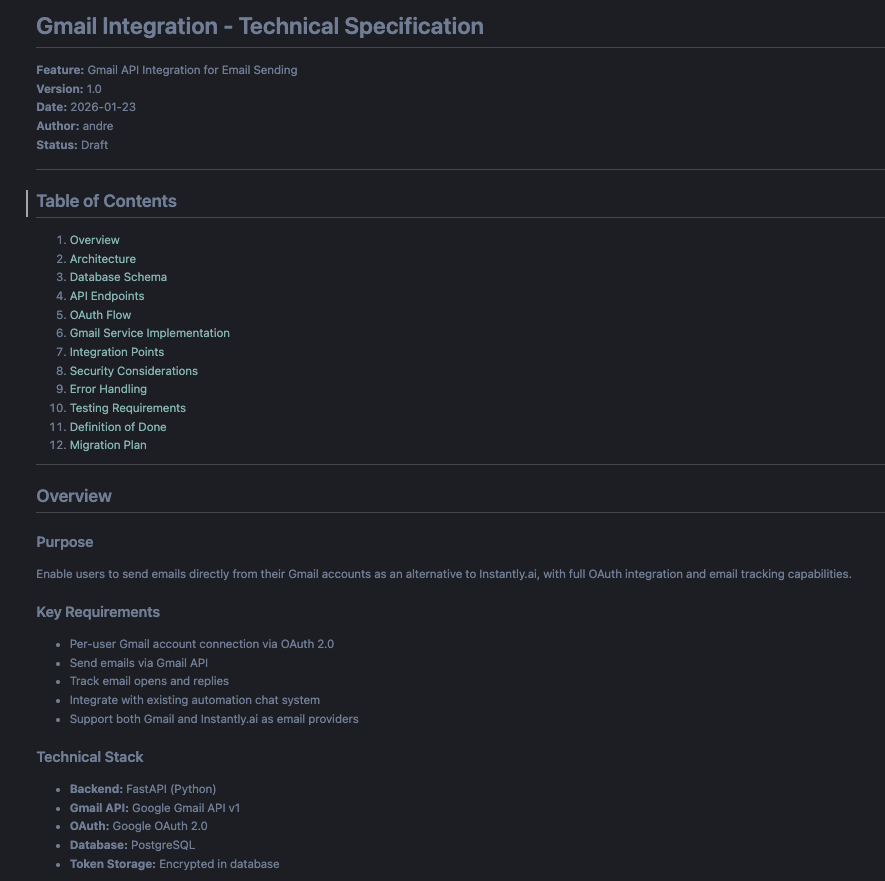

Before the AI writes a single line of code, we lock the architecture, APIs, and Definition of Done (DoD)

Add a technical spec so engineering doesn’t turn into vibes. This is where I write down the “engineering reality” stuff:

- Architecture shape

- Data ownership

- Auth rules

- Endpoints

- Async jobs / background processing

- Error handling

- Logs/metrics

- Deployment constraints

I’m not trying to over-design—I’m just locking the handful of decisions that keep things coherent. Without that, the system slowly becomes a Frankenstein.
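A small check can keep the technical spec honest before any code generation starts. The sketch below assumes the spec is stored as a simple mapping of section name to text; the section keys are hypothetical identifiers for the items listed above, not a standard format.

```python
# Hypothetical keys for the technical spec sections listed above.
REQUIRED_SECTIONS = [
    "architecture",
    "data_ownership",
    "auth_rules",
    "endpoints",
    "async_jobs",
    "error_handling",
    "logs_metrics",
    "deployment_constraints",
]


def missing_sections(spec: dict[str, str]) -> list[str]:
    """Return required sections that are absent or left blank in the spec."""
    return [name for name in REQUIRED_SECTIONS if not spec.get(name, "").strip()]
```

If `missing_sections` returns anything, that is a decision nobody has made yet, and it is cheaper to make it now than to let the model make it implicitly.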

Break the work into PR-sized tasks. This has been the biggest unlock for me. Instead of asking AI to “implement the whole feature,” I break it into pieces that can be reviewed cleanly.

Like: build the API route with validation + errors, implement the UI upload flow, add polling with retries, write tests for parsing, add authorization checks.

If it can’t be reviewed in a single PR without pain, the task is too big.
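As an illustration of what one PR-sized task might look like, here is a minimal Python sketch of “add polling with retries.” Everything in it is an assumption made for the example: the status strings (`"done"`, `"failed"`), the backoff schedule, and the `fetch_status` callable standing in for whatever client the real project uses.

```python
import time
from typing import Callable, Optional


def poll_until_done(
    fetch_status: Callable[[], str],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Poll fetch_status until it reports a terminal state or attempts run out.

    Returns the terminal status ("done" or "failed"), or None on timeout.
    """
    for attempt in range(max_attempts):
        try:
            status = fetch_status()
        except ConnectionError:
            status = "unreachable"  # transient error: treat as not finished yet
        if status in ("done", "failed"):
            return status
        if attempt < max_attempts - 1:
            # Exponential backoff between attempts: 1s, 2s, 4s, ...
            sleep(base_delay * 2 ** attempt)
    return None
```

The `sleep` parameter is injectable so the function can be tested without waiting. A unit this size, with one clear behavior and an obvious test, is about what fits in a single reviewable PR.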

I use AI in draft mode, not authority mode. This is the mental model that’s been working best for me: AI drafts, engineers approve.

I let it generate code quickly, refactor, write tests, document functionality. But I don’t treat it as the final judge of correctness. Otherwise you end up merging things because they sounded right, not because they actually are right.

Keep tightening the spec with feedback loops. After AI proposes an implementation, I try to force reality back into it.

I’ll ask:

- What fails here?

- What edge cases did we miss?

- What happens if the user refreshes mid-flow?

- Where should we log and alert?

- What would an attacker try?

Then I update the spec, because for me specs aren’t static. They evolve as the system becomes real.

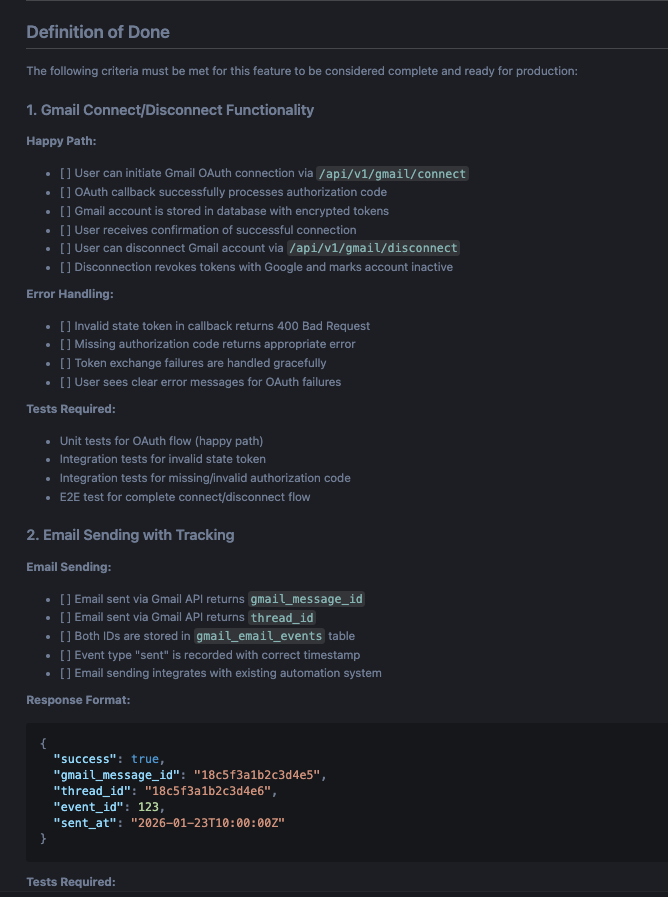

Definition of Done: the guardrails that keep AI-generated code production-ready

Prompts That Have Actually Been Useful

Once the spec kit exists, my prompts stop being random and start being productive. Stuff like:

- “Ask me the questions you need before writing the PRD.”

- “Break this into PR-sized tasks with acceptance criteria and test plans.”

- “Only implement what’s stated and ask if something is missing.”

- “List failure modes and propose mitigations.”

- “Write tests first, then implement, and explain how to verify locally.”

Simple prompts, but they massively improve the quality of what comes back.
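These prompts can also be assembled from the spec itself so that scope travels with every request. The Python sketch below is one possible shape, not a prescribed format: `TaskSpec` and `build_prompt` are hypothetical names, and the closing instructions are taken from the prompts listed above.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    # Hypothetical shape for one PR-sized task taken from a spec kit.
    title: str
    acceptance_criteria: list[str]
    out_of_scope: list[str] = field(default_factory=list)


def build_prompt(task: TaskSpec) -> str:
    """Render a task spec into a prompt that restates scope and guardrails."""
    lines = [f"Task: {task.title}", "", "Acceptance criteria:"]
    lines += [f"- {c}" for c in task.acceptance_criteria]
    if task.out_of_scope:
        lines += ["", "Out of scope:"]
        lines += [f"- {item}" for item in task.out_of_scope]
    lines += [
        "",
        "Only implement what's stated and ask if something is missing.",
        "Write tests first, then implement, and explain how to verify locally.",
    ]
    return "\n".join(lines)
```

Generating the prompt from the same data the team reviews means the model and the reviewer work from one source of scope, which helps when a spec changes mid-project.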

Making Spec Kits and AI Code Generation Sustainable

AI code generation is powerful, but capability without process creates technical debt and unrealistic expectations.

What I’ve found is that teams shipping high-quality software with AI and spec kits aren’t working faster by cutting corners—they’re working faster by frontloading the decisions that matter:

- Clear requirements and acceptance criteria

- Locked technical architecture and patterns

- Reviewable task breakdown

- Human oversight on security, performance, and maintainability

- Feedback loops that improve specs over time

This doesn’t slow teams down. It prevents the costly rework that happens when AI builds the wrong thing quickly.

Spec kits provide the structure to make AI code generation predictable, maintainable, and secure. They’re not heavyweight documentation—they’re a practical approach to keeping AI useful without letting the codebase drift into chaos.

When to Bring In Expert Help

Even with spec kits in place, many teams discover a new bottleneck: they need experienced engineers who know how to architect systems that AI can implement consistently.

The challenge shifts from “we need more developers” to “we need the right expertise”:

- Senior engineers who can define architecture, establish patterns, and review AI-generated code for security and performance implications

- Technical architects who can design systems that scale and prevent the fragmentation that happens when AI generates code without clear guardrails

- DevOps and platform engineers who can build CI/CD pipelines, enforce standards, and create the infrastructure that makes AI-augmented development sustainable

- Security specialists who can audit AI-generated code for vulnerabilities and establish secure coding patterns

If your team is integrating AI code generation and finding that the bottleneck isn’t implementation speed but rather architectural decisions, code review capacity, or specialized technical expertise, staff augmentation can accelerate your roadmap more effectively than adding general-purpose developers.

At AgilityFeat, we help teams scale strategically by bringing in senior technical talent to establish patterns, review AI-generated code, and architect systems that your team—and AI—can build on confidently.

Need help establishing spec-driven workflows for AI code generation—or want to work with great nearshore developers like Andrea? Talk to AgilityFeat about staff augmentation that fits your technical needs.
