Building software to manipulate files is always tricky, but when the files are audio files and the tool is the Web Audio API in HTML5, the challenge gets significantly harder. In this post, we will look at the basics you need to know to get started recording and manipulating audio in the browser with the Web Audio API.

Browser Compatibility

There are many things to take into consideration, starting with audio file and browser compatibility. Although browsers are becoming more standardized, there are still syntax differences for particular calls that you need to be aware of when using the Web Audio API. For example, here is how to call getUserMedia in Chrome, Android, Firefox and Internet Explorer (notice the difference in prefixes):

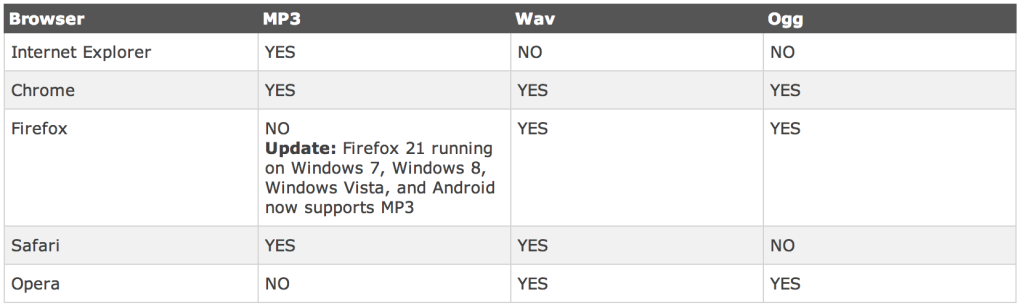
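As a rough sketch of the prefix handling described above, the helper below tries the unprefixed promise-based call first and then falls back to the prefixed callback versions. The function name `getUserMediaCompat` and the `nav` parameter are our own (in a browser you would pass `navigator`), and the `ms` prefix is an assumption based on the browsers listed above.

```javascript
// Sketch: resolve getUserMedia across prefixed and unprefixed implementations.
// `nav` is normally the browser's `navigator`; it is a parameter (our choice)
// so the function can also be exercised outside a browser.
function getUserMediaCompat(nav, constraints) {
  // Modern, promise-based API.
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === 'function') {
    return nav.mediaDevices.getUserMedia(constraints);
  }
  // Legacy callback-based API, possibly vendor-prefixed.
  const legacy =
    nav.getUserMedia ||
    nav.webkitGetUserMedia ||
    nav.mozGetUserMedia ||
    nav.msGetUserMedia; // assumption: included only because IE is listed above
  if (!legacy) {
    return Promise.reject(new Error('getUserMedia is not supported'));
  }
  // Wrap the callback form in a promise so callers see a single interface.
  return new Promise((resolve, reject) => {
    legacy.call(nav, constraints, resolve, reject);
  });
}

// Usage in a browser:
// getUserMediaCompat(navigator, { audio: true })
//   .then((stream) => { /* connect the stream to the audio graph */ })
//   .catch((err) => console.error(err));
```

Wrapping the legacy call in a promise keeps the calling code the same no matter which variant the browser exposes.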

Browsers also differ in the file types that each one can play.

You will want to specify a base case, or cases, for the file types you want to support. For our example we took a “democracy” approach, which means using the audio format that is compatible with the most browsers. We decided to use WAV files.

The first thing we do is check the file type and, if it is not supported, run a process to convert it to WAV. To determine whether a particular file type will play, you can use the canPlayType method of audio and/or video elements.

Here’s the sample code:
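A minimal sketch of that check might look like the following. The helper name `canPlay` is hypothetical; the only real API used is `canPlayType`, which is called on an audio (or video) element.

```javascript
// Returns true when the element reports it may be able to play the given type.
// `mediaElement` is an <audio> or <video> element.
function canPlay(mediaElement, mimeType) {
  // canPlayType returns "probably", "maybe", or "" (empty string = cannot play).
  return mediaElement.canPlayType(mimeType) !== '';
}

// Usage in a browser:
// const audio = document.createElement('audio');
// if (!canPlay(audio, 'audio/ogg; codecs="vorbis"')) {
//   /* run the conversion to WAV */
// }
```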

And here is a list of common values for the canPlayType method:
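As an illustration, the MIME strings below are ones commonly passed to canPlayType. The selection is our own assumption rather than an exhaustive list, and `supportReport` is a hypothetical helper that simply records what the browser answers for each one.

```javascript
// Commonly probed audio MIME types (an illustrative selection, not exhaustive).
const COMMON_AUDIO_TYPES = [
  'audio/wav',
  'audio/mpeg',                    // MP3
  'audio/mp4; codecs="mp4a.40.2"', // AAC in an MP4 container
  'audio/ogg; codecs="vorbis"',
  'audio/webm; codecs="opus"',
];

// Builds an object mapping each MIME type to the raw canPlayType answer.
function supportReport(mediaElement, types = COMMON_AUDIO_TYPES) {
  const report = {};
  for (const type of types) {
    report[type] = mediaElement.canPlayType(type);
  }
  return report;
}

// Usage in a browser:
// console.table(supportReport(document.createElement('audio')));
```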

Lastly, the possible return values from that call are “probably”, “maybe”, and an empty string, which means the type cannot be played.

So, when canPlayType returns an empty string, we convert the file.

Understanding the HTML5 Web Audio API

Before the HTML5 Web Audio API, we used the audio tag to interact with audio files, calling methods such as play or pause. The Web Audio API opens a whole new set of opportunities through the audio context. An audio context controls the creation of the nodes it contains and the execution of the audio processing, or decoding.

Put more simply, the audio context lets you manage sounds. In our example, we will create a single audio context for our application (more on that later).

A super important part of the HTML5 Web Audio API is the AudioNode. AudioNodes are the basic building blocks of an AudioContext: they represent audio sources, the audio destination, and intermediate processing modules. We’ll talk more about AudioNodes in our next post.
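To make the three roles concrete, here is a small sketch that wires a source node through a processing node to the destination. The choice of a gain node as the intermediate module and the helper name `buildPlaybackGraph` are our assumptions for illustration. They are not necessarily the nodes used in this project.

```javascript
// Builds the chain: source -> gain -> destination.
// `ctx` is an AudioContext and `audioBuffer` is an already decoded AudioBuffer.
function buildPlaybackGraph(ctx, audioBuffer, volume = 0.8) {
  const source = ctx.createBufferSource(); // audio source node
  source.buffer = audioBuffer;

  const gain = ctx.createGain();           // intermediate processing node
  gain.gain.value = volume;

  source.connect(gain);
  gain.connect(ctx.destination);           // audio destination node
  return { source, gain };
}

// Usage in a browser:
// const { source } = buildPlaybackGraph(audioContext, decodedBuffer, 0.5);
// source.start(0);
```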

Here is a list of the AudioNodes we used:

It’s worth noting that some browsers limit the number of AudioContext instances you can create, so we create only a single AudioContext instance for our application.

When we work with audio files, we do not use the actual WAV file directly. We load the audio file and get an ArrayBuffer, and from there we can start manipulating the audio.

Here’s how it works: we use the XMLHttpRequest object to request the URL of the file we want to load, which gives us access to that file’s ArrayBuffer:

As you can see in the snippet of code, we are setting the responseType to “arraybuffer”. Other possible values of the responseType attribute are “blob”, “document”, “json”, and “text”. See the XMLHttpRequest documentation for more information on response types.
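One way to enforce the single-instance rule is a small lazy accessor like the sketch below. The name `getAudioContext` and the `globalObj` parameter are hypothetical (in a browser you would pass `window`), and the `webkitAudioContext` fallback covers older WebKit-based browsers.

```javascript
// Holds the one AudioContext shared by the whole application.
let sharedContext = null;

// Creates the AudioContext on first use and returns the same instance afterwards.
// `globalObj` is normally `window`.
function getAudioContext(globalObj) {
  if (sharedContext === null) {
    const Ctor = globalObj.AudioContext || globalObj.webkitAudioContext;
    if (!Ctor) {
      throw new Error('Web Audio API is not supported');
    }
    sharedContext = new Ctor();
  }
  return sharedContext;
}

// Usage in a browser:
// const ctx = getAudioContext(window);
```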
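The request described here can be sketched roughly as follows. The promise wrapper, the function name `loadArrayBuffer`, and the optional `XhrCtor` parameter are our additions so the sketch is easier to reuse and test. In a browser you can omit the second argument.

```javascript
// Loads `url` and resolves with the response as an ArrayBuffer.
// `XhrCtor` defaults to the browser's XMLHttpRequest; passing it in is optional.
function loadArrayBuffer(url, XhrCtor) {
  const Xhr = XhrCtor || XMLHttpRequest;
  return new Promise((resolve, reject) => {
    const request = new Xhr();
    request.open('GET', url, true);
    // Ask for raw binary data instead of text.
    request.responseType = 'arraybuffer';
    request.onload = () => {
      if (request.status >= 200 && request.status < 300) {
        resolve(request.response);
      } else {
        reject(new Error('Request failed with status ' + request.status));
      }
    };
    request.onerror = () => reject(new Error('Network error while loading ' + url));
    request.send();
  });
}

// Usage in a browser:
// loadArrayBuffer('/sounds/clip.wav').then((arrayBuffer) => { /* decode it */ });
```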

Audio quality is important, and with the ArrayBuffer we can create a new audio file that we can manipulate and play. We can set the quality of the audio by specifying the sample rate.
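Before the ArrayBuffer can be manipulated, it typically has to be decoded into an AudioBuffer, which also tells you the sample rate you are working with. The sketch below assumes the standard `decodeAudioData` method and uses its callback form. The wrapper name `decodeArrayBuffer` is ours.

```javascript
// Decodes encoded audio bytes (for example a WAV file) into an AudioBuffer.
// `ctx` is an AudioContext.
function decodeArrayBuffer(ctx, arrayBuffer) {
  return new Promise((resolve, reject) => {
    // The callback form is used because older implementations do not return a promise.
    const maybePromise = ctx.decodeAudioData(arrayBuffer, resolve, reject);
    // Newer implementations also return a promise; failures are already
    // reported through `reject`, so the duplicate rejection is ignored here.
    if (maybePromise && typeof maybePromise.catch === 'function') {
      maybePromise.catch(() => {});
    }
  });
}

// Usage in a browser:
// decodeArrayBuffer(audioContext, arrayBuffer).then((audioBuffer) => {
//   console.log(audioBuffer.sampleRate, audioBuffer.duration);
// });
```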

Sample rate is the number of samples of audio carried per second, measured in Hz or kHz (one kHz being 1 000 Hz). For example, 44 100 samples per second can be expressed as either 44 100 Hz, or 44.1 kHz. So, if you need to increase the quality of a sound, you can increase the sample rate.

However, it is also important to keep bandwidth in mind. If you are going to be uploading and downloading the files, you might want to record at a lower sample rate such as 20 kHz (and use that as a source) to reduce the file size and CPU use.

In the example above, we use the ArrayBuffer that comes from the XMLHttpRequest (Level 2) call.
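To see how much the sample rate affects size, here is a quick back-of-the-envelope calculation for uncompressed PCM audio. The helper `pcmByteSize` is hypothetical, and the defaults (mono, 16-bit samples) are assumptions chosen for the example.

```javascript
// Size in bytes of uncompressed PCM audio, not counting the file header.
function pcmByteSize(sampleRate, seconds, channels = 1, bytesPerSample = 2) {
  return sampleRate * seconds * channels * bytesPerSample;
}

// One minute of mono, 16-bit audio:
// pcmByteSize(44100, 60) → 5292000 bytes (about 5.3 MB)
// pcmByteSize(20000, 60) → 2400000 bytes (about 2.4 MB)
```

In this example, dropping from 44.1 kHz to 20 kHz cuts the payload by more than half.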

Once we have the ArrayBuffer, we create a Blob. To create the new Blob, we pass its constructor a new DataView instance, which is created from the ArrayBuffer.
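The sketch below shows one way the DataView and Blob can fit together when the data is raw samples: it writes a standard 44-byte WAV header followed by 16-bit PCM samples, then wraps the DataView in a Blob. The mono, 16-bit layout and the helper names (`writeAscii`, `encodeWav`, `toWavBlob`) are our assumptions, not necessarily what this project does.

```javascript
// Writes an ASCII string into the view one byte at a time.
function writeAscii(view, offset, text) {
  for (let i = 0; i < text.length; i++) {
    view.setUint8(offset + i, text.charCodeAt(i));
  }
}

// Encodes mono Float32 samples in [-1, 1] as a 16-bit PCM WAV file.
// Returns a DataView over the complete file (header + samples).
function encodeWav(samples, sampleRate) {
  const bytesPerSample = 2;
  const dataLength = samples.length * bytesPerSample;
  const view = new DataView(new ArrayBuffer(44 + dataLength));

  writeAscii(view, 0, 'RIFF');
  view.setUint32(4, 36 + dataLength, true);              // remaining file size
  writeAscii(view, 8, 'WAVE');
  writeAscii(view, 12, 'fmt ');
  view.setUint32(16, 16, true);                          // size of the fmt chunk
  view.setUint16(20, 1, true);                           // format 1 = PCM
  view.setUint16(22, 1, true);                           // number of channels
  view.setUint32(24, sampleRate, true);
  view.setUint32(28, sampleRate * bytesPerSample, true); // bytes per second
  view.setUint16(32, bytesPerSample, true);              // block align
  view.setUint16(34, 16, true);                          // bits per sample
  writeAscii(view, 36, 'data');
  view.setUint32(40, dataLength, true);

  for (let i = 0; i < samples.length; i++) {
    // Clamp, then scale the float sample to the signed 16-bit range.
    const s = Math.max(-1, Math.min(1, samples[i]));
    view.setInt16(44 + i * 2, s < 0 ? s * 0x8000 : s * 0x7fff, true);
  }
  return view;
}

// Wraps the encoded bytes in a Blob that an audio element can play.
function toWavBlob(samples, sampleRate) {
  return new Blob([encodeWav(samples, sampleRate)], { type: 'audio/wav' });
}
```

If the ArrayBuffer already holds a complete WAV file, the header step is unnecessary and `new Blob([new DataView(arrayBuffer)], { type: 'audio/wav' })` is enough.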

It is important to know that we use an instance of the OfflineAudioContext object, which is similar to the AudioContext object. The difference is that the OfflineAudioContext does not play the audio through the device; it renders it into a buffer as fast as it can, which is usually faster than real time.
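As a sketch of how an OfflineAudioContext can be used to produce audio at a different sample rate, the helper below renders an existing AudioBuffer into a new one. The function name `resample` and the `OfflineCtor` parameter are hypothetical. In a browser you would pass `window.OfflineAudioContext` (or `webkitOfflineAudioContext` on older WebKit builds).

```javascript
// Renders `audioBuffer` into a new AudioBuffer at `targetSampleRate`.
// `OfflineCtor` is the OfflineAudioContext constructor.
function resample(audioBuffer, targetSampleRate, OfflineCtor) {
  // Number of frames needed to hold the same duration at the new rate.
  const frameCount = Math.ceil(audioBuffer.duration * targetSampleRate);
  const offline = new OfflineCtor(
    audioBuffer.numberOfChannels,
    frameCount,
    targetSampleRate
  );

  const source = offline.createBufferSource();
  source.buffer = audioBuffer;
  source.connect(offline.destination);
  source.start(0);

  // Nothing is played; the result is delivered as an AudioBuffer.
  // Assumes the promise form of startRendering (older builds use `oncomplete`).
  return offline.startRendering();
}

// Usage in a browser:
// resample(decodedBuffer, 22050, window.OfflineAudioContext)
//   .then((rendered) => console.log(rendered.sampleRate));
```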

At this point, you have laid the groundwork for recording and manipulating sound via the Web Audio API. In our next post in the series, we’ll dig into the final steps to take to complete the project.